I wanted Astromech to get to the point where it could provably do the essentials, before talking about the possibilities. In the last few weeks, those essentials have come together.

Data acquisition from commodity image sensors, check. Real-time DSP of the data using Fourier transforms, check. FITS compression of the data using Haar transforms, finished a few days ago. Distributed comms working. Video comms working. Thousands of lines of '3D visualization' code.

So, what's the point? It's all about mapping reality. Let's take this in stages:

Observation

If you want to see the universe, you need to point a telescope at the sky. There's a great deal of optical and mechanical engineering involved, but you can shortcut that and buy a surprisingly good 6-inch Maksutov-Cassegrain off eBay for a few hundred bucks.

Most of science is about taking pictures of fuzzy blobs. Here's my first image of Saturn.

For most people, this is where the hobby ends. Every now and again the dust gets blown off, they observe Saturn, get their Wows, and no actual science is really achieved.

A smaller cadre of 'serious' amateur astronomers are out every night they can get, some with automated telescopes of surprising power and resolution. Some treat it like a professional photography shoot with fewer catwalk models and more heavenly bodies, and get quite a good income. But the vast majority of that data just sits on hard drives, not doing science either.

Acquisition

For science to happen, you have to write all the numbers down. Eyes are terrible scientific instruments, but it also turns out that the JPEG and H.264 compression algorithms are equally bad, literally smoothing out the most important data points.

It's why professional photographers currently make the best amateur astronomers, because they have access to acquisition devices (e.g. $4k Nikon cameras) which don't apply this consumer-grade degradation.

When you look into the details, what stands out is that the same hardware is often involved; it's the signal processing chain that's different.

Here is where we start having to consider our 'capabilities', in terms of how much CPU, memory, and bandwidth you have. If you point a high-resolution camera at the sky and just start recording raw, you will very quickly overwhelm your storage capacity, even if you have a RAID of terabyte drives.

Trust me on this. Been there, still haven't got the disk space back.
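To put a rough number on that, here is a back-of-the-envelope sketch. The sensor figures are assumptions picked for illustration (a 1920x1080 sensor, 16-bit raw samples, 30 frames per second), not the specs of any particular camera, and the function names are made up for this example.

```python
def raw_data_rate(width, height, bytes_per_pixel, fps):
    """Bytes per second produced by an uncompressed video stream."""
    return width * height * bytes_per_pixel * fps


def hours_to_fill(capacity_bytes, rate_bytes_per_s):
    """How long a drive of the given capacity lasts at that data rate."""
    return capacity_bytes / rate_bytes_per_s / 3600


# Assumed example sensor: 1920x1080, 2 bytes per pixel (16-bit raw), 30 fps.
rate = raw_data_rate(1920, 1080, 2, 30)
hours = hours_to_fill(10**12, rate)  # one decimal terabyte

print(f"{rate / 1e6:.1f} MB/s, 1 TB fills in {hours:.1f} hours")
# prints "124.4 MB/s, 1 TB fills in 2.2 hours"
```

Under those assumptions a terabyte drive lasts a little over two hours, so a single clear night can eat several of them.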

And the sad thing is, if, like me, you used "commodity" image capture hardware, then the data is scientifically useless. Just pretty pictures.

Video imagery of the moon, taken through my telescope in '13.

Signal Processing

If you want to turn that raw video into useful data, you have to bring some fearsome digital signal processing to bear. Just to clean up the noise. Just to run 'quality checks'. Then there's the mission-specific code (the meteor or comet detector algorithms, if that's what you're doing) and the compression you'll need to turn the torrent into a manageable flow you can actually keep.

Not just to store it to your hard drive. But also to "stream-cast" it to other observers. Video conferencing for telescopes.

Why? Because when strange things happen in the sky, the first thing astronomers do is call each other up and ask "Are you seeing this?". Some of those events are over with in seconds, and some of the greatest mysteries in astronomy persist because, basically, we can't get our asses into gear to respond fast enough.

Have we learned nothing from Twitter?

We can't wait for the data to get schlepped back home, and processed a week later. We need automated telescopes that can get excited, and call in help, while we're over the far side of the paddock having tea with the farmer's daughter.
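For a feel of what the compression step involves, here is a minimal sketch of one level of an integer Haar transform (sometimes called the S-transform). It is not Astromech's code and not the FITS compression format itself; the function names and the sample row are made up for illustration. The idea is that neighbouring pixels get replaced by their average and their difference, and on smooth sky the differences are small numbers that pack down well, while the original values stay exactly recoverable.

```python
def haar_forward(samples):
    """One level of an integer Haar transform on an even-length list."""
    if len(samples) % 2:
        raise ValueError("need an even number of samples")
    pairs = list(zip(samples[0::2], samples[1::2]))
    averages = [(a + b) // 2 for a, b in pairs]  # floored pair averages
    details = [a - b for a, b in pairs]          # pair differences
    return averages, details


def haar_inverse(averages, details):
    """Exactly undo haar_forward using integer arithmetic only."""
    samples = []
    for s, d in zip(averages, details):
        a = s + (d + 1) // 2  # restores the bit lost by the floored average
        samples.extend([a, a - d])
    return samples


# Hypothetical row of raw pixel counts: flat background with one bright spot.
row = [1000, 1002, 1001, 1003, 1500, 1498, 1002, 1000]
averages, details = haar_forward(row)
restored = haar_inverse(averages, details)

print(averages)  # [1001, 1002, 1499, 1001]
print(details)   # [-2, -2, 2, 2]
```

A real compressor would apply the same step again to the averages, in both image directions, and then entropy-code the small detail values. Unlike JPEG, nothing is smoothed away: `restored` equals `row`.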

Ground Truth

Up until now we've just been talking about slight improvements to the usual observer tasks. Stuff that's done already. Making the tools of professional astronomers more available to amateurs is nice (and, as we've seen, that's really all in the DSP), but what's the point?

Here we could diverge into talking about an algorithm called "Ray Bundle Adjustment", or even "Wave Phase Optics" but Ray's a complicated guy, so I'll sum it up:

If you want to reconstruct something in 3D, you need to take pictures of it from multiple angles. You probably guessed that already. There's a big chunk of math for how you combine all the images together, and reconstruct the original camera positions and errors. Those are the "bundles" that are "adjusted" until everything makes sense.

The more independent views you have on something, the better. Even for 2D imagery. Even aberrations in the sensors become useful, so long as they're consistent. It can create "super-resolution".
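The simplest version of "more views is better" is plain frame stacking, which is a much more modest technique than super-resolution or bundle adjustment, but shows the same effect. The sketch below uses entirely synthetic data: a flat patch of "sky", Gaussian noise, and frames that are assumed to be already perfectly aligned. All names and numbers are illustrative.

```python
import random
import statistics


def noisy_frame(truth, sigma, rng):
    """Simulate one exposure: the true signal plus independent noise."""
    return [value + rng.gauss(0.0, sigma) for value in truth]


def stack(frames):
    """Average already-aligned frames pixel by pixel."""
    return [statistics.fmean(pixel) for pixel in zip(*frames)]


def rms_error(frame, truth):
    """Root-mean-square difference between a frame and the true signal."""
    return (sum((f - t) ** 2 for f, t in zip(frame, truth)) / len(truth)) ** 0.5


rng = random.Random(42)           # fixed seed so the run is repeatable
truth = [100.0] * 500             # hypothetical flat patch of sky
frames = [noisy_frame(truth, 10.0, rng) for _ in range(100)]

single_error = rms_error(frames[0], truth)
stacked_error = rms_error(stack(frames), truth)

print(f"single frame error: {single_error:.2f}")
print(f"100-frame stack error: {stacked_error:.2f}")
```

With independent noise, the error of the stack should fall roughly as one over the square root of the number of frames, so a hundred frames buys about a tenfold improvement. Real data adds the hard parts this skips: alignment, and sensors whose errors are not independent.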

Beyond that, there's "Light Field Cameras", which use a more thorough understanding of the nature of light to produce better images - specifically that traditional image sensors only record half the relevant information from the incoming photons.

Most camera sensors record - for each square 'pixel' of light - how much light fell on the sensor (intensity) and its colour (wavelength). What you don't get is the direction of the incident photon (it's just assumed), or its phase.

For a very long time we thought those other components weren't important, mostly because the human eye can't resolve that information. Insects can perceive these qualities, though. Bees can see polarization, and compound eyes are naturally good at encoding photon direction. We couldn't, so we didn't build our telescopes or optical theory with that in mind.

Plus, the math is hard. You have to do the equivalent of 4D partial Fourier transforms. Who wants that?

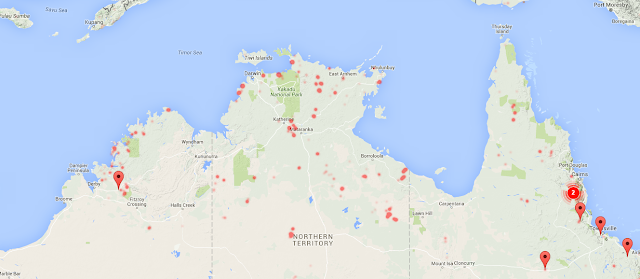

But when you work through it, you realize that you can consider every telescope pointed at the sky to be one element of a planet-wide wave-phase optics "compound eye", using the existing hardware (and maybe a polarization filter or two).

All we need to do (ha!) is connect together the computers of everyone currently pointing a telescope at the sky, and run a global wave-phase computation on all that data, in real-time. (I might be glossing over a few minor critical details - learn enough to prove me wrong.)

This is not beyond the capability of our machines. Not anymore. The hardware is there. The software isn't. This is what I've been working on with Astromech. A social data acquisition system that assumes you're not doing this alone.

What you get out of this is "Ground Truth", a term that mostly comes from the land-surveyors who are used to pointing fairly short-ranged flying cameras at a very nearby planet. But it's the same problem.

This is the stage where we can finally say we're "Mapping." Once we got enough good photos of the asteroid Ida, we constructed a topographical map. Once we got enough information on its orbital mechanics, we could predict where it would be.

Fundamentally, that allows us to prove our mastery of the maps by asking questions like "If I point a telescope at Ida right now, using these co-ordinates, what would I see?"

i.e.: Can I see their house from here?

Simulation

To really answer that question means you have a 3D-engine capable of rendering the object using all known information. If we assume Ida hasn't changed much in terms of surface features, then it's pretty easy to "redraw" the asteroid at the position and orientation that the orbital mechanics says.

Then you just apply all the usual lighting equations, and you'll have a damn passable-looking asteroid on your screen.

But it's not 'real' anymore. Not exactly. It's not an image that anyone has taken in reality. It's a simulation. A computer-enhanced hallucination. A flight of the imagination.

Good simulations encode all the physically relevant parameters, and the main point of them is to provide a rigorous test of the phrase "That's funny..." ("How funny is it, exactly?")

Because by now humans are pretty good at predicting the way rocks tumble. It's kind of our thing. When rocks suddenly act in a way other than predicted (than simulated) it indicates that we've got something wrong. Or something interesting is going on.

And being wrong, or finding something interesting; that's Science!

Simulations are also the only way that most of us are ever going to "travel" to these places. Thankfully our brains are wired such that we can hang up our suspenders of disbelief long enough to forget where we are. Imagination plays tricks on us. There are people right now (in VR headsets viewing Curiosity data) who've probably forgotten they're not on Mars.

Used the right way, that's a gift beyond measure.

Sentinels

About the most interesting events we can see are stars blinking on and off when they shouldn't be.

Yes, this happens. A lot. Sometimes stars just explode.

Then there's all kinds of 'dimming' events that have little to do with the star itself, just something else passing in front of it. We tend to find exoplanets via transits, for example. Black holes in free space create 'gravitational lenses' that distort the stars behind them like a funhouse mirror, and we might like to know where they are, exactly.

Let's say we wanted to watch all the stars, in case they do something weird. That's a big job. How big?

Hell, if we just assign the stars in our galaxy and we get every single person on the planet trained as an astronomer, then each person has to keep vigil over 20 stars (assuming they could see them at some wavelength). If we're assigning galaxies too, then everyone gets 10,000 of those.

Please consider that a moment. If every human were assigned their share of known galaxies, you'd have 10,000 galaxies to watch over. How many do you think you'd get done in a night? How long until you checked the same one twice and noticed any upset?

We're gonna need some help on this.

And really, there's only one answer: create little computer programs based on all our data and simulations, and task 200 billion threads to watch over the stars for us, and send a tweet when something funny happens.

We can't even keep up with Netflix. How the hell are we going to keep up with the constantly-running terapixel sky-show that is our universe?

I've got a background in AI, but I'll skip the mechanics and go straight to the poetics: we will create a trillion digital dreamers - little AIs that live on starlight, on the information it brings, who are most happy when they can see their allocated dot, and spend all their time imagining what it should look like, and comparing that against the reality. Some dreamers expect the mundane, others look for the fantastic, and bit by bit, this ocean of correlated dreamers will create our great map of the universe.
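Stripped of the poetry, the smallest possible dreamer might look something like the sketch below. It is only an illustration, not Astromech's design: the `Sentinel` class, its thresholds, and the brightness readings are all hypothetical, and the "imagination" here is nothing more than a running average of what the star has done so far (kept with Welford's online algorithm), standing in for a real physical simulation.

```python
import math


class Sentinel:
    """Watches one star's brightness and flags samples far from expectation."""

    def __init__(self, threshold_sigma=5.0, warmup=10):
        self.threshold_sigma = threshold_sigma  # how surprising is "funny"
        self.warmup = warmup                    # samples to learn from first
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations (Welford)

    def observe(self, brightness):
        """Return True if this sample looks anomalous, else learn from it."""
        if self.count >= self.warmup:
            sigma = math.sqrt(self.m2 / (self.count - 1))
            surprise = abs(brightness - self.mean)
            if sigma > 0 and surprise > self.threshold_sigma * sigma:
                return True  # anomalies are reported, not absorbed
        self.count += 1
        delta = brightness - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (brightness - self.mean)
        return False


# Hypothetical readings: a steady star, then one sudden dip (index 11).
readings = [100.0, 100.2, 99.9, 100.1, 99.8, 100.0, 100.3,
            99.7, 100.1, 99.9, 100.0, 90.0, 100.1]
sentinel = Sentinel()
alerts = [i for i, value in enumerate(readings) if sentinel.observe(value)]

print(alerts)  # [11]
```

Each sentinel needs only three numbers of state, which is what makes the idea of running billions of them plausible. What to do with an alert (the tweet, the call to other telescopes) is left out here.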

Every asteroid. Every comet. Every errant speck of light. Every solar prominence or close approach. We are on the verge of creating this map, and the sentinels who will watch over the stars for us, to keep it accurate.

There's not a lot of choice.